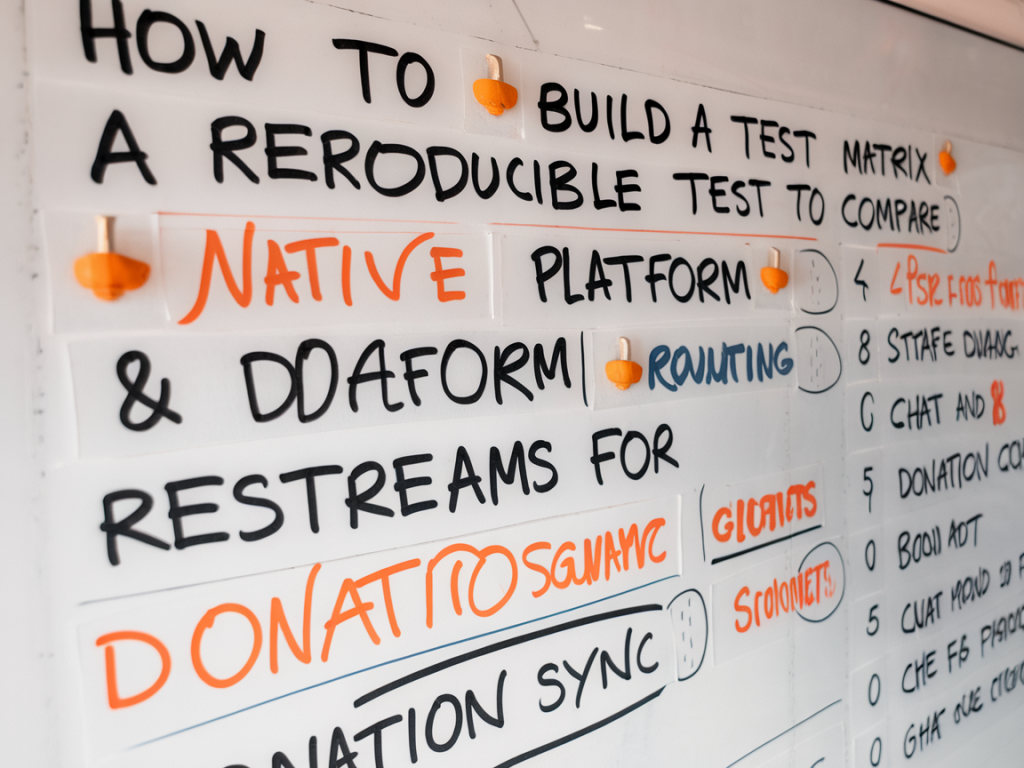

I’ve run a lot of experiments where the difference between “works” and “works reliably” comes down to how reproducible your tests are. When you’re comparing a routing layer like restream.io to native platform streams for things like chat and donation synchronization, you’re not just measuring video quality — you’re measuring event delivery, ordering, latency, and failure modes. Below I walk through a test matrix I use to make those comparisons repeatable and actionable.

Why you need a reproducible test matrix

Ad-hoc testing with a few test streams and random donations will tell you something, but it won’t tell you why or how to fix it. A reproducible matrix forces you to define variables (what you change), controls (what you keep the same) and metrics (what you measure). That structure makes root-cause analysis possible and helps you compare restream.io routing versus native platform streams on consistent terms.

Core goals and metrics

Set clear goals before you begin. For chat and donation sync I typically track:

- Event latency: time between an action on the source platform and the event arriving at the consumer (e.g., broadcaster overlay, bot, third-party dashboard).

- Event ordering: whether events arrive in the same order they were created.

- Event loss rate: percent of events never delivered or delivered with missing metadata (e.g., donor name, amount).

- Duplication rate: how often events are emitted more than once.

- Rate-limit and throttling behavior: how systems degrade under high event volume.

- Error modes and recovery: how reconnection and backfill behave after interruptions.
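Loss, duplication and ordering can all be computed from event IDs alone, with no timestamps involved. Here is a minimal sketch. It assumes each generated event carries a unique integer sequence number (a hypothetical `seq` field set by your generator) and that each consumer's log lists those numbers in arrival order. `delivery_metrics` is an illustrative name. It only counts events that never arrived. Events that arrive with missing metadata need a separate payload check.

```python
from collections import Counter


def delivery_metrics(sent_seqs, received_seqs):
    """Loss, duplication and ordering metrics for one consumer.

    sent_seqs: sequence numbers the generator emitted (assumed unique).
    received_seqs: sequence numbers in the order the consumer saw them.
    """
    sent = set(sent_seqs)
    counts = Counter(received_seqs)
    delivered = sent & set(counts)
    # Loss: sent events that never arrived at all.
    loss_rate = 1 - len(delivered) / len(sent) if sent else 0.0
    # Duplication: extra copies beyond the first arrival of each event.
    extra_copies = sum(c - 1 for c in counts.values() if c > 1)
    dup_rate = extra_copies / len(received_seqs) if received_seqs else 0.0
    # Ordering: first arrivals whose sequence number is lower than one
    # already seen, i.e. the event showed up after a later event.
    seen, out_of_order, highest = set(), 0, None
    for s in received_seqs:
        if s in seen:
            continue  # repeat copies are counted as duplicates, not reorders
        seen.add(s)
        if highest is not None and s < highest:
            out_of_order += 1
        highest = s if highest is None else max(highest, s)
    return {"loss_rate": loss_rate, "dup_rate": dup_rate, "out_of_order": out_of_order}
```

For example, `delivery_metrics([1, 2, 3, 4], [1, 3, 2, 3])` reports one lost event (4), one duplicate (3) and one reordered arrival (2 after 3).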

Variables to include in the matrix

Keep the matrix comprehensive but manageable — I recommend prioritizing variables that impact routing and delivery:

- Stream origin: native platform stream (Twitch ingest) vs restream.io relay.

- Platform endpoints: Twitch, YouTube, Facebook — each platform’s chat/donation APIs behave differently.

- Relay vs direct integration: restream.io may re-author and re-inject events, whereas a native setup consumes the platform's webhooks and chat APIs directly.

- Client type: OBS with local bot, cloud bot (Heroku / AWS Lambda), or a hosted overlay provider (StreamElements/Streamlabs).

- Event type: chat message, cheer/sub/donation, channel point redemptions, custom tipping provider (e.g., Streamlabs PayPal vs third-party services).

- Network conditions: low latency, simulated packet loss, high latency (use a network shaping tool like clumsy or tc).

- Concurrency: single events vs bursts (50–200+ events/min).
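One way to keep the matrix "comprehensive but manageable" is to expand the variables into every combination and then prune explicitly. Below is a sketch using `itertools.product`. The level names in `VARIABLES` are hypothetical shorthand for the list above. The example pruning rule, which skips single-event runs on degraded networks, is only an illustration of the `keep` hook, not a recommendation.

```python
import itertools

# Hypothetical shorthand for the variable levels listed above.
VARIABLES = {
    "stream_path": ["native", "restream"],
    "platforms": [("twitch",), ("twitch", "youtube")],
    "network": ["clean", "loss_5pct", "rtt_200ms"],
    "event_type": ["chat", "donation"],
    "concurrency": ["single", "burst"],
}


def build_matrix(variables, keep=lambda row: True):
    """Expand variable levels into numbered test rows.

    `keep` filters out combinations you don't intend to run.
    """
    names = list(variables)
    rows = []
    for combo in itertools.product(*(variables[n] for n in names)):
        row = dict(zip(names, combo))
        if keep(row):
            rows.append(row)
    # Number rows after filtering so IDs stay contiguous (T001, T002, ...).
    for i, row in enumerate(rows, start=1):
        row["test_id"] = f"T{i:03d}"
    return rows


# Illustrative pruning rule: only run bursts when the network is degraded.
matrix = build_matrix(
    VARIABLES,
    keep=lambda r: not (r["concurrency"] == "single" and r["network"] != "clean"),
)
```

The full product here is 48 rows and the rule trims it to 32. Writing the pruning down as code, rather than skipping rows by hand, keeps the reason for each omission reproducible.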

Designing test cases

I build test cases that map variables to measurable outcomes. Examples:

- Baseline: native Twitch stream → native Twitch chat + donation provider. Low latency network. Single events every 30s for 10 minutes.

- Relay test: source stream → restream.io → Twitch. Same donation provider and overlay. Repeat baseline cadence.

- Burst test: 200 chat messages and 20 donation events in a 60s window, comparing restream vs native under stress.

- Network degradation: introduce 5% packet loss and 200ms RTT to measure backfill and ordering differences.

- Reconnect test: kill and restore the producer stream mid-run to observe how events are replayed or lost.

- Cross-platform sync: same source routed to Twitch + YouTube and check cross-platform ordering for multi-platform bots.
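The cadences in these test cases can be expressed as deterministic schedules. Two of them are the baseline's one event every 30s for 10 minutes and the burst of 200 chat messages plus 20 donations in 60s. Expressed this way, every run of the same test sends the same events at the same offsets. This is a sketch only. `ScheduledEvent`, `baseline_schedule` and `burst_schedule` are hypothetical names, and the actual sending (tmi.js, the YouTube API, a donation sandbox) is left to whatever runner consumes the schedule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScheduledEvent:
    offset_s: float  # seconds after the run starts
    kind: str        # "chat" or "donation"
    seq: int         # per-run sequence number for loss/ordering checks


def baseline_schedule(interval_s=30.0, duration_s=600.0, kind="chat"):
    """One event every interval_s seconds for duration_s seconds."""
    n = int(duration_s // interval_s)
    return [ScheduledEvent(i * interval_s, kind, i + 1) for i in range(n)]


def burst_schedule(chat=200, donations=20, window_s=60.0):
    """Spread chat and donation events evenly over one window.

    Donations sit at evenly spaced slots so they interleave with chat
    instead of all landing at the end of the burst.
    """
    total = chat + donations
    step = window_s / total
    # Slot indices for donations; distinct as long as donations <= total.
    donation_slots = {(k + 1) * total // donations - 1 for k in range(donations)}
    return [
        ScheduledEvent(i * step, "donation" if i in donation_slots else "chat", i + 1)
        for i in range(total)
    ]
```

A runner would sleep until each `offset_s` and send the event. Because `seq` is fixed by the schedule, the same numbers can later be used to detect loss and reordering.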

Instrumentation and automation

Manual testing is fine for discovery, but reproducibility requires automation. My typical stack:

- Scripting event generation: use bots or scripts to send chat messages and trigger donations. For Twitch chat, I use tmi.js or TwitchJS; for YouTube, the Live Streaming API's liveChatMessages endpoints, or a Puppeteer-driven browser session to simulate chat. For donations, use test endpoints or sandbox modes where available (Streamlabs has a testing API).

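- Time sync: make sure all machines are NTP-synced and check their offset before each run. I also add a monotonically increasing sequence number to each test event payload, so ordering and loss checks don't depend on clocks at all. A monotonic clock reading is only comparable on the machine that produced it, so cross-machine latency still relies on synced wall clocks.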

- Logging and collection: centralize event logs using a lightweight collector (Fluentd, Logstash, or a simple Node service writing to JSON files). Capture raw platform webhook payloads, bot logs, and overlay receipts.

- Network shaping: use tc (Linux) or clumsy (Windows) to simulate packet loss, jitter, and latency. Automate profiles so each run uses the same conditions.

- Orchestration: use a script (bash, Python) or a small CI job to kick off test runs in sequence and mark metadata (test ID, timestamp, variables) so data maps back to the matrix rows.
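Two pieces of this stack are easy to script from the orchestration layer: fixed network profiles and per-event tagging. The sketch below makes several assumptions. The profile names, the `[T002#7]`-style marker and the helper names are hypothetical. The functions only build `tc` argv lists and do not run them; applying them needs root, e.g. via `subprocess.run(apply, check=True)`. The netem syntax (`tc qdisc replace dev <dev> root netem loss 5%` / `delay 200ms`) is standard, but netem shapes egress only, so 200ms of delay on one host adds roughly 200ms to the round trip.

```python
import re
import shlex
import time
import uuid

# Hypothetical netem profiles for the network-condition column of the matrix.
NETEM_PROFILES = {
    "clean": None,
    "loss_5pct": ["loss", "5%"],
    "rtt_200ms": ["delay", "200ms"],
}


def netem_commands(profile, dev="eth0"):
    """Build (apply, reset) argv lists for a profile; running them needs root."""
    args = NETEM_PROFILES[profile]
    if args is None:
        return [], []
    apply = ["tc", "qdisc", "replace", "dev", dev, "root", "netem", *args]
    reset = ["tc", "qdisc", "del", "dev", dev, "root"]
    return apply, reset


# Marker appended to chat text so overlays and bots that only see the message
# body can still be mapped back to a test row and sequence number.
MARKER = re.compile(r"\[(T\d{3})#(\d+)\]")


def tag_event(test_id, seq, text):
    """Return (metadata for the sender log, chat text carrying the marker)."""
    meta = {
        "test_id": test_id,
        "seq": seq,
        "event_id": uuid.uuid4().hex,
        # Wall-clock send time; only comparable across hosts if NTP is synced.
        "sent_ms": time.time_ns() // 1_000_000,
    }
    return meta, f"{text} [{test_id}#{seq}]"


def parse_marker(text):
    """Recover (test_id, seq) from a received message, or None if absent."""
    m = MARKER.search(text)
    return (m.group(1), int(m.group(2))) if m else None


if __name__ == "__main__":
    apply, reset = netem_commands("loss_5pct", dev="eth0")
    print(shlex.join(apply))  # tc qdisc replace dev eth0 root netem loss 5%
```

Putting the marker in the visible text is a deliberate choice. Some consumers (overlays especially) never see platform metadata, only the rendered message.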

Sample test matrix table

| Test ID | Stream Path | Platform(s) | Network | Event Type | Concurrency | Expected Observations |
|---|---|---|---|---|---|---|
| T001 | Native → Twitch | Twitch | No shaping | Chat/Donation | Low | Low latency, ordered, no loss |
| T002 | restream.io → Twitch | Twitch | No shaping | Chat/Donation | Low | Compare latency, check metadata integrity |
| T003 | Native → Twitch + YouTube | Twitch, YouTube | 5% packet loss | Chat | Burst | Ordering, duplicates, backfill behavior |
| T004 | restream.io → Twitch + YouTube | Twitch, YouTube | 200ms RTT | Donation | Burst | Event duplication and reconciliation |

How I measure and analyze results

For each run I capture:

- Source timestamp and event ID (from generator).

- Arrival timestamp at each consumer (overlay, bot, webhook endpoint).

- Full payloads so I can inspect metadata differences (e.g., currency formatting, name sanitization).

- Platform-side delivery data where accessible (e.g., Twitch EventSub subscription status and the message ID and retry headers on each webhook delivery).
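With those captures in place, latency per consumer is a join on event ID between the generator's log and each consumer's receipts. The sketch below assumes JSON Lines logs with hypothetical field names (`event_id`, `sent_ms`, `consumer`, `recv_ms`). It counts only the earliest receipt per consumer and event toward latency. Receipts with IDs the generator never sent are returned separately rather than silently dropped.

```python
import json
from collections import defaultdict


def load_jsonl(path):
    """Read one JSON object per non-empty line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


def latencies_by_consumer(sent, arrivals):
    """Join generator records with consumer receipts by event ID.

    sent: dicts with "event_id" and "sent_ms".
    arrivals: dicts with "event_id", "consumer" and "recv_ms".
    Returns ({consumer: {event_id: latency_ms}}, unknown_receipts).
    """
    sent_at = {r["event_id"]: r["sent_ms"] for r in sent}
    first_seen = {}
    unknown = []
    for a in arrivals:
        if a["event_id"] not in sent_at:
            unknown.append(a)  # not from this run, or the ID was mangled
            continue
        key = (a["consumer"], a["event_id"])
        # Keep the earliest receipt; later copies are duplicates.
        if key not in first_seen or a["recv_ms"] < first_seen[key]:
            first_seen[key] = a["recv_ms"]
    out = defaultdict(dict)
    for (consumer, eid), recv in first_seen.items():
        out[consumer][eid] = recv - sent_at[eid]
    return dict(out), unknown
```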

Analysis is straightforward: compute latency distributions, count missing/duplicate IDs, and visualize with simple plots (I use Python/matplotlib or Google Sheets). Pay attention to the 95th percentile latency and tail behavior — median alone hides intermittent delays that impact UX.
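The tail numbers come straight from the standard library. Below is a minimal sketch using `statistics.quantiles` (Python 3.8+). `latency_summary` is an illustrative name. `method="inclusive"` is a judgment call that keeps estimates inside the observed range, which suits small runs such as a 20-event baseline.

```python
import statistics


def latency_summary(latencies_ms):
    """Median, p95, p99 and max for one consumer's latencies."""
    data = sorted(latencies_ms)
    if not data:
        raise ValueError("no latencies recorded")
    if len(data) == 1:
        # quantiles() needs at least two points on older Python versions.
        only = data[0]
        return {"count": 1, "p50": only, "p95": only, "p99": only, "max": only}
    # n=100 returns the 99 cut points for percentiles 1..99; index k-1 is p(k).
    cuts = statistics.quantiles(data, n=100, method="inclusive")
    return {
        "count": len(data),
        "p50": statistics.median(data),
        "p95": cuts[94],
        "p99": cuts[98],
        "max": data[-1],
    }
```

Comparing `p95` and `max` across the native and restream.io rows is usually more telling than comparing medians.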

Common pitfalls and how I avoid them

Some things that bite tests if you don’t control for them:

- Rate limiting: Platforms may silently drop messages or delay EventSub deliveries under heavy load. I record HTTP status codes and delivery headers to correlate drops with rate limits.

- Event normalization: restream.io or overlays sometimes transform payloads (names lowercased, currency standardized). Include payload checks to detect these changes.

- Clock skew: without strict time sync your latency numbers are meaningless. I always validate NTP sync and use monotonic counters where possible.

- Third-party throttles: donation processors may queue transactions or block test payments. Use sandbox/test modes and document provider behavior.

- Human factors: reduce manual steps — scripted runs reduce operator error and make runs repeatable.
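For the event-normalization pitfall, a field-by-field comparison between what the generator sent and what each consumer received makes transformations visible instead of anecdotal. This is a sketch with hypothetical field names in `CHECKED_FIELDS`. The labels from `classify` are rough buckets: lowercased names, numerically equal amounts with different formatting, missing fields, and everything else.

```python
from decimal import Decimal, InvalidOperation

# Hypothetical field names; map them to whatever your generator and
# consumers actually record.
CHECKED_FIELDS = ("donor_name", "amount", "currency", "message")


def payload_diffs(sent, received, fields=CHECKED_FIELDS):
    """Return {field: (sent_value, received_value)} for every field that
    changed or went missing between generation and a consumer's receipt."""
    diffs = {}
    for field in fields:
        before, after = sent.get(field), received.get(field)
        if before != after:
            diffs[field] = (before, after)
    return diffs


def classify(before, after):
    """Rough label for one field difference, to group normalization effects."""
    if after is None:
        return "missing"
    if isinstance(before, str) and isinstance(after, str) and before.lower() == after.lower():
        return "case_changed"
    try:
        if Decimal(str(before)) == Decimal(str(after)):
            return "reformatted"  # same number, different formatting (5.00 vs 5.0)
    except InvalidOperation:
        pass  # not numeric on one side
    return "changed"
```

Counting labels per consumer shows whether a change comes from the relay, the overlay, or the platform itself.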

Interpreting trade-offs

From tests I’ve run, restream-style relays can simplify multi-destination encoding and reduce upstream bandwidth, but they add an additional network and processing hop — which can affect latency and occasionally reorder events during retries. Native platform streams generally give you the most direct route for chat and donations, but they make multi-platform sync and cross-platform overlays more complex.

What you value determines the winner: if sub-second chat latency and native EventSub reliability are critical, native might be better. If you need one encoder to feed many platforms and can tolerate tens or hundreds of milliseconds of additional latency, a routing layer shines. Your test matrix will show that trade-off quantitatively.

Next steps and reproducibility checklist

- Document every test run (test ID, git commit for scripts, network profile).

- Store raw logs and parsed outputs in a versioned location (S3 or a repo of JSON files).

- Automate periodic regression runs — small changes in platform behavior can appear over weeks.

- Share the matrix with engineers and creators so everyone understands the constraints and the measurable impact of routing choices.
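Much of this checklist can be captured automatically by writing a small manifest next to each run's logs. The sketch below uses hypothetical field names. `current_commit` shells out to `git rev-parse HEAD` and falls back to `"unknown"` when git is missing or the scripts aren't in a repository.

```python
import json
import subprocess
from datetime import datetime, timezone


def current_commit(repo_dir="."):
    """Best-effort git commit of the test scripts; 'unknown' if unavailable."""
    try:
        out = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            cwd=repo_dir, capture_output=True, text=True, check=True,
        )
        return out.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return "unknown"


def run_manifest(test_id, variables, network_profile, commit):
    """Metadata that maps a run's raw logs back to its matrix row."""
    return {
        "test_id": test_id,
        "started_utc": datetime.now(timezone.utc).isoformat(),
        "scripts_commit": commit,
        "network_profile": network_profile,
        "variables": variables,
    }


def write_manifest(manifest, path):
    """Store the manifest as JSON alongside the run's raw logs."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(manifest, f, indent=2, sort_keys=True)
```

A typical call is `write_manifest(run_manifest("T003", row, "loss_5pct", current_commit()), "runs/T003/manifest.json")`, where the path layout is only an example.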

If you’d like, I can share a starter repository with the scripts and templates I use to run these comparisons. I’ve found that once you have a reproducible harness, moving from hypothesis to actionable change gets a lot faster — and your decisions stop being “it felt like” and become “the data says.”